Dive Brief:

- Nvidia posted a fifth consecutive quarter of triple-digit revenue growth amid a massive AI infrastructure building boom, according to the company’s fiscal Q2 2025 earnings report. The chipmaker had record quarterly revenue of $30 billion, up 122% year over year, during the three-month period ending July 28.

- As Nvidia expands its broader AI infrastructure business with the Blackwell rack system solution, its data center segment revenues grew 154% year over year to $26.3 billion, accounting for the lion’s share of the company’s total quarterly revenue.

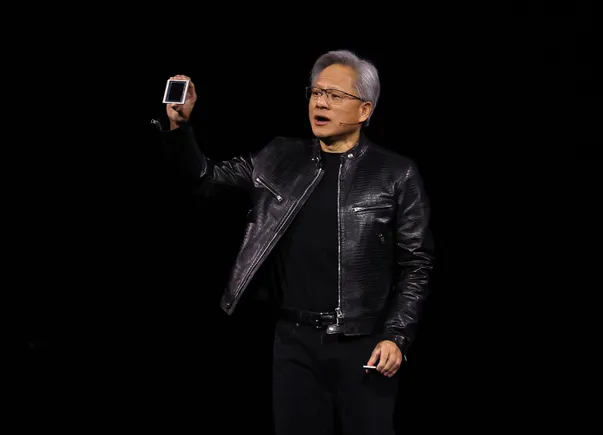

- “Data centers worldwide are in full steam to modernize the entire computing stack with accelerated computing and generative AI,” Nvidia President and CEO Jensen Huang said during the Wednesday earnings call. “People are just clamoring to transition the $1 trillion of established installed infrastructure to a modern infrastructure.”
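The growth percentages above can be turned back into approximate year-ago figures with simple arithmetic. The sketch below is only illustrative: it uses the rounded numbers quoted in this brief, and the function and variable names are made up for the example. It suggests total revenue of roughly $13.5 billion in the year-ago quarter and a data center share of about 88% of current revenue.

```python
def implied_prior(current: float, yoy_growth_pct: float) -> float:
    """Back out the year-ago value from a current value and its YoY growth."""
    return current / (1 + yoy_growth_pct / 100)


# Rounded figures quoted in the brief, in billions of dollars.
total_revenue = 30.0        # up 122% year over year
data_center_revenue = 26.3  # up 154% year over year

prior_total = implied_prior(total_revenue, 122)
prior_data_center = implied_prior(data_center_revenue, 154)
data_center_share = data_center_revenue / total_revenue

print(f"Implied year-ago total revenue: ${prior_total:.1f}B")
print(f"Implied year-ago data center revenue: ${prior_data_center:.1f}B")
print(f"Data center share of quarterly revenue: {data_center_share:.0%}")
```

Because the inputs are rounded, the results are approximations rather than reported figures.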

Dive Insight:

Nvidia remains entrenched in a year-plus run as the leading provider of high-end AI processing power driving what Huang has dubbed “the next industrial revolution.”

Hyperscale cloud providers and generative AI model developers bought up Nvidia Hopper and Grace Hopper GPUs as they rolled off the production line.

Chart: Nvidia revenues soared to $30B in two years, driven by an AI boom (revenue by quarter, in billions)

While signs of a general chip shortage have largely dissipated, orders for the Blackwell product line are piling up, according to Nvidia EVP and CFO Colette Kress.

“Hopper supply and availability have improved,” Kress said Wednesday. “Demand for Blackwell platforms is well above supply, and we expect this to continue into next year.”

The company recently “executed a change to the Blackwell GPU mask to improve production yields” and expects to start shipments in Q4, Kress added.

Forrester Senior Analyst Alvin Nguyen noted a modest improvement in GPU supply.

“Hopper supply and availability improvements means increased semiconductor capacity, but probably not substantially so,” Nguyen said in an email. “With competitors like AMD and Intel catching up to Hopper levels of performance, Blackwell will be in higher demand by those wanting a competitive edge.”

AWS, Microsoft and Google, the three largest hyperscalers by market share, are racing to build out data center capacity. As enterprises and model builders devour existing GPU resources, cloud providers are pouring tens of billions of dollars into data center construction.

“If you just look at the world’s cloud service providers, the amount of GPU capacity they have available, it’s basically none,” Huang said. “They’re renting capacity to model makers. They’re renting it to start-up companies. And a generative AI company spends the vast majority of their invested capital into infrastructure so that they could use an AI to help them create products.”

While GPU capacity can be hard to source during LLM training, it’s typically available at other times, according to Nguyen. But the situation has put enterprise AI plans in a precarious spot.

“Since we know most enterprises are not getting GPU allocations, the lack of cloud availability means that costs will be higher and will cause them to scale back their AI ambitions,” Nguyen said. “If organizations cannot get AI services to experiment with and deploy AI capabilities, they will need to invest in something else.”